Detecting Face-Touch Hand Moves Using Smartwatch Inertial Sensors and Convolutional Neural Networks

DOI: https://doi.org/10.18201/ijisae.2022.275

Keywords: COVID-19, CNN, Hand Activity Recognition, HRT, IMU, Motion Sensors, Sensor Fusion, Wearables

Abstract

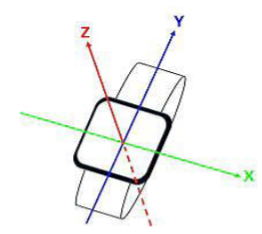

According to the World Health Organization (WHO), avoiding touching the face in public or crowded places is an effective way to prevent respiratory viral infections. This recommendation has become even more important with the current health crisis and the worldwide spread of the COVID-19 pandemic. However, most face touches are unconscious, which makes it difficult for people to monitor their hand movements and consistently avoid touching their faces. Hand-worn wearable devices such as smartwatches are equipped with multiple sensors that can be used to track hand movements automatically. This work proposes a smartwatch application that uses small, efficient, end-to-end Convolutional Neural Network (CNN) models to classify hand motion and identify Face-Touch moves. To train the models, a large dataset was collected for both the left and right hands, with over 28k training samples that represent multiple hand motion types, body positions, and hand orientations. The application provides real-time feedback and alerts the user with vibration and sound whenever they attempt to touch their face. The results show state-of-the-art face-touch detection performance, with average recall, precision, and F1-score of 96.75%, 95.1%, and 95.85%, respectively, and a low False Positive Rate (FPR) of 0.04%. By using efficient configurations and small models, the app achieves high efficiency and can run for many hours without a significant impact on battery life, which makes it applicable to most off-the-shelf smartwatches.
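The four evaluation figures in the abstract follow the standard definitions for a binary detector. The minimal Python sketch below shows how they relate to confusion-matrix counts, assuming a one-vs-rest framing in which Face-Touch is the positive class and every other hand move is negative. The names `BinaryCounts` and `face_touch_metrics` are hypothetical and are not taken from the authors' code.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class BinaryCounts:
    """Confusion-matrix counts, assuming Face-Touch vs. all other hand moves."""
    tp: int  # face touches correctly detected
    fp: int  # other moves wrongly flagged as face touches
    fn: int  # face touches that were missed
    tn: int  # other moves correctly ignored


def _safe_div(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a class is absent from the counts.
    return num / den if den else 0.0


def face_touch_metrics(c: BinaryCounts) -> Dict[str, float]:
    """Precision, recall, F1-score and false positive rate for the positive class."""
    precision = _safe_div(c.tp, c.tp + c.fp)  # share of alerts that were real face touches
    recall = _safe_div(c.tp, c.tp + c.fn)     # share of real face touches that raised an alert
    f1 = _safe_div(2 * precision * recall, precision + recall)  # harmonic mean of the two
    fpr = _safe_div(c.fp, c.fp + c.tn)        # share of non-face-touch moves that raised an alert
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}


if __name__ == "__main__":
    # Illustrative counts only; these are not results reported in the paper.
    print(face_touch_metrics(BinaryCounts(tp=90, fp=10, fn=10, tn=890)))
```

With these illustrative counts, precision, recall, and F1 all equal 0.9, and FPR is 10/900, or about 1.1%. Because the FPR denominator covers every negative sample, FPR can be very small even when false positives still pull precision noticeably below 100%.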




License

Copyright (c) 2022 Eftal Sehirli, Abdullah Alesmaeil

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.


IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license allows readers to share and adapt the material, provided that they give appropriate credit, provide a link to the license, and indicate whether changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.