2D Face Emotion Recognition and Prediction Using Labelled Selective Transfer Machine and CNN Transfer Learning Techniques for Unbalanced Datasets

Keywords:

Face Emotion Recognition, LBP, LSTM, CNN, Transfer Learning, DenseNet, VGG-19

Abstract

Emotion recognition and prediction from facial expressions is one of the most challenging tasks in computing. Most traditional approaches depend heavily on pre-processing and feature extraction techniques. This paper presents the implementation and evaluation of learning algorithms, namely the Labelled Selective Transfer Machine (LSTM) and several CNNs, for recognising and predicting emotions from 2D facial expressions. The methods are assessed on recognition rate, learning time, and the effect of unbalanced datasets. The proposed system targets two datasets, CK+ and JAFFE, with two different techniques: the LSTM approach uses histogram equalisation for pre-processing and LBP (Local Binary Patterns) for feature extraction, while a transfer learning approach is explored using the DenseNet and VGG-19 architectures. DenseNet achieved recognition rates of 96.64% on CK+ and 97.45% on JAFFE. LSTM achieved a recognition rate of 98.43% on JAFFE, whereas it reached 72.63% on CK+.
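The pre-processing and feature-extraction steps of the LSTM pipeline (histogram equalisation followed by LBP) can be illustrated with a minimal pure-Python sketch. It assumes an 8-bit grayscale face image stored as a list of rows, global histogram equalisation, the basic 3x3 LBP operator with 8 neighbours, and a single normalised 256-bin LBP histogram over the whole image as the feature vector. The abstract does not state the LBP radius, pattern variant, or whether histograms are computed per face region, so these choices and the function names (`equalize_histogram`, `lbp_codes`, `lbp_histogram`) are hypothetical.

```python
from typing import List

Image = List[List[int]]  # 8-bit grayscale image as rows of pixel values 0..255


def equalize_histogram(img: Image) -> Image:
    """Global histogram equalisation using the cumulative distribution (CDF)."""
    flat = [p for row in img for p in row]
    hist = [0] * 256
    for p in flat:
        hist[p] += 1
    # Running (cumulative) count of pixels at or below each intensity.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in img]
    # Lookup table that maps intensities so the output CDF is roughly linear.
    lut = [round((c - cdf_min) * 255 / (total - cdf_min)) if c > 0 else 0 for c in cdf]
    return [[lut[p] for p in row] for row in img]


# Clockwise neighbour offsets (dy, dx), starting at the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(img: Image) -> Image:
    """Basic 3x3 LBP: each neighbour >= the centre pixel sets one bit."""
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    codes = []
    for y in range(1, h - 1):  # border pixels lack a full neighbourhood
        row = []
        for x in range(1, w - 1):
            centre = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(OFFSETS):
                if img[y + dy][x + dx] >= centre:
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def lbp_histogram(img: Image) -> List[float]:
    """Normalised 256-bin histogram of LBP codes, used as the feature vector."""
    codes = [c for row in lbp_codes(img) for c in row]
    hist = [0.0] * 256
    for c in codes:
        hist[c] += 1
    return [v / len(codes) for v in hist]


if __name__ == "__main__":
    # Hypothetical 4x4 "face" with a smooth intensity ramp.
    face = [[10, 20, 30, 40], [50, 60, 70, 80], [90, 100, 110, 120], [130, 140, 150, 160]]
    features = lbp_histogram(equalize_histogram(face))
    print(len(features), features[120])  # 256 1.0
```

On this ramp image, equalisation spreads the 16 distinct intensities evenly over 0 to 255 in steps of 17, and every interior pixel receives the same LBP code (120) because only its right, lower-right, lower, and lower-left neighbours are brighter. LBP-based expression systems commonly split the face into a grid and concatenate per-cell histograms to keep spatial information; that variant would extend `lbp_histogram` rather than replace it.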

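Because the methods are evaluated partly on the effect of unbalanced datasets, it may help to see how a single recognition rate can hide weak performance on rare emotion classes. The sketch below assumes the recognition rate is the number of correct predictions divided by the number of test samples, and additionally reports per-class recall and their mean (balanced accuracy). The function name `recognition_rates`, the labels, and the 90/10 split are hypothetical and are not results from the paper.

```python
from collections import Counter
from typing import Dict, List, Tuple


def recognition_rates(y_true: List[str], y_pred: List[str]) -> Tuple[float, Dict[str, float], float]:
    """Return overall recognition rate, per-class recall, and balanced accuracy."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    overall = correct / len(y_true)
    support = Counter(y_true)  # number of test samples per true class
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct per class
    per_class = {label: hits[label] / n for label, n in support.items()}
    balanced = sum(per_class.values()) / len(per_class)
    return overall, per_class, balanced


if __name__ == "__main__":
    # Hypothetical, deliberately unbalanced test set: 90 "happy", 10 "fear".
    y_true = ["happy"] * 90 + ["fear"] * 10
    # A degenerate classifier that predicts "happy" for every sample.
    y_pred = ["happy"] * 100
    overall, per_class, balanced = recognition_rates(y_true, y_pred)
    print(f"overall={overall:.2f} balanced={balanced:.2f} per_class={per_class}")
```

With this split the overall recognition rate is 0.90 even though "fear" is never recognised (recall 0.0), so the balanced accuracy falls to 0.50. Reporting per-class figures next to the overall rate is one way to expose such imbalance effects.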
References

M. Altamura et al., “Facial emotion recognition in bipolar disorder and healthy aging,” J. Nerv. Ment. Dis., vol. 204, no. 3, pp. 188–193, 2016, doi: 10.1097/NMD.0000000000000453.

L. Ricciardi et al., “Facial emotion recognition and expression in Parkinson’s disease: An emotional mirror mechanism?,” PLoS One, vol. 12, no. 1, pp. 1–16, 2017, doi: 10.1371/journal.pone.0169110.

M. B. Harms, A. Martin, and G. L. Wallace, “Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies,” Neuropsychol. Rev., vol. 20, no. 3, pp. 290–322, 2010, doi: 10.1007/s11065-010-9138-6.

C. F. Benitez-Quiroz, R. B. Wilbur, and A. M. Martinez, “The not face: A grammaticalization of facial expressions of emotion,” Cognition, vol. 150, pp. 77–84, May 2016, doi: 10.1016/j.cognition.2016.02.004.

P. Ekman, “Universal facial expressions of emotion,” California Mental Health, vol. 8, no. 4. pp. 151–158, 1970.

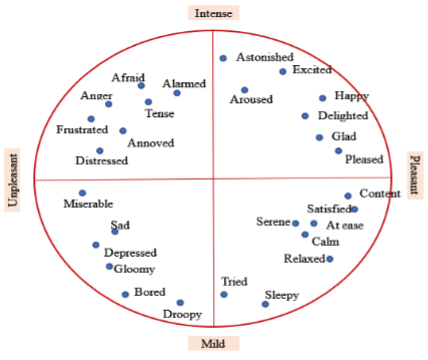

J. A. Russell, “A circumplex model of affect,” J. Pers. Soc. Psychol., vol. 39, no. 6, pp. 1161–1178, 1980, doi: 10.1037/h0077714.

Z. Lou, F. Alnajar, J. M. Alvarez, N. Hu, and T. Gevers, “Expression-Invariant Age Estimation Using Structured Learning,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 40, no. 2, pp. 365–375, Feb. 2018, doi: 10.1109/TPAMI.2017.2679739.

M. Shreve, “Automatic Macro- and Micro-Facial Expression Spotting and Applications,” Grad. Theses Diss., no. January, 2013.

J. Zhou, Y. Wang Zhenan Sun, Y. Xu Linlin Shen, J. Feng Shiguang Shan, Y. Qiao Zhenhua Guo, and S. Yu, “Biometric Recognition.” Online. . Available: http://www.springer.com/series/7412.

Sarma, C. A. ., S. . Inthiyaz, B. T. P. . Madhav, and P. S. . Lakshmi. “An Inter Digital- Poison Ivy Leaf Shaped Filtenna With Multiple Defects in Ground for S-Band Bandwidth Applications”. International Journal on Recent and Innovation Trends in Computing and Communication, vol. 10, no. 8, Aug. 2022, pp. 55-66, doi:10.17762/ijritcc.v10i8.5668.

C. Zhang and Z. Zhang, “Improving Multiview Face Detection with Multi-Task Deep Convolutional Neural Networks.”

W. M. Fang and P. Aarabi, “Robust real-time audiovisual face detection,” Multisensor, Multisource Inf. Fusion Archit. Algorithms, Appl. 2004, vol. 5434, p. 411, 2004, doi: 10.1117/12.545934.

J. Nicolle, V. Rapp, K. Bailly, L. Prevost, and M. Chetouani, “Robust continuous prediction of human emotions using multiscale dynamic cues,” in ICMI’12 - Proceedings of the ACM International Conference on Multimodal Interaction, 2012, pp. 501–508, doi: 10.1145/2388676.2388783.

S. K. A. Kamarol, M. H. Jaward, J. Parkkinen, and R. Parthiban, “Spatiotemporal feature extraction for facial expression recognition,” IET Image Process., vol. 10, no. 7, pp. 534–541, Jul. 2016, doi: 10.1049/iet-ipr.2015.0519.

J. M. Saragih, S. Lucey, and J. F. Cohn, “Face alignment through subspace constrained mean-shifts,” Proc. IEEE Int. Conf. Comput. Vis., no. Clm, pp. 1034–1041, 2009, doi: 10.1109/ICCV.2009.5459377.

X. Xiong and F. De La Torre, “Supervised descent method and its applications to face alignment,” Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., pp. 532–539, 2013, doi: 10.1109/CVPR.2013.75.

L. Zhang and D. Tjondronegoro, “Facial expression recognition using facial movement features,” IEEE Trans. Affect. Comput., vol. 2, no. 4, pp. 219–229, 2011, doi: 10.1109/T-AFFC.2011.13.

X. Ding, W. S. Chu, F. D. La Torre, J. F. Cohn, and Q. Wang, “Facial action unit event detection by cascade of tasks,” Proc. IEEE Int. Conf. Comput. Vis., pp. 2400–2407, 2013, doi: 10.1109/ICCV.2013.298.

A. C. Cruz, B. Bhanu, and N. S. Thakoor, “Vision and attention theory based sampling for continuous facial emotion recognition,” IEEE Trans. Affect. Comput., vol. 5, no. 4, pp. 418–431, Oct. 2014, doi: 10.1109/TAFFC.2014.2316151.

O. Çeliktutan, S. Ulukaya, and B. Sankur, “A comparative study of face landmarking techniques,” Eurasip J. Image Video Process., vol. 2013, no. 1, pp. 1–27, 2013, doi: 10.1186/1687-5281-2013-13.

M. Yang and L. Zhang, “Gabor feature based sparse representation for face recognition with Gabor occlusion dictionary,” Lect. Notes Comput. Sci. (including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics), vol. 6316 LNCS, no. PART 6, pp. 448–461, 2010, doi: 10.1007/978-3-642-15567-3_33.

Y. li Tian, T. Kanade, and J. F. Cohn, “Recognizing upper face action units for facial expression analysis,” Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., vol. 1, no. 2, pp. 294–301, 2000, doi: 10.1109/cvpr.2000.855832.

C. Science, A. Yinka, A. Ali, D. A. R, and A. Jemai, “Information Security June 2011 International Journal of Computer Science & Information Security,” no. June, 2011.

W. S. Chu, F. De La Torre, and J. F. Cohn, “Selective transfer machine for personalized facial expression analysis,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 39, no. 3, pp. 529–545, Mar. 2017, doi: 10.1109/TPAMI.2016.2547397.

M. Liu, S. Shan, R. Wang, and X. Chen, “Learning expressionlets on spatio-temporal manifold for dynamic facial expression recognition,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Sep. 2014, pp. 1749–1756, doi: 10.1109/CVPR.2014.226.

S. Hizlisoy, S. Yildirim, and Z. Tufekci, “Music emotion recognition using convolutional long short term memory deep neural networks,” Eng. Sci. Technol. an Int. J., vol. 24, no. 3, pp. 760–767, 2021, doi: 10.1016/j.jestch.2020.10.009.

A. J. Holden et al., “Reducing the Dimensionality of,” vol. 313, no. July, pp. 504–507, 2006.

X. Han and Q. Du, “Research on Face Recognition Based on Deep Learning.”

T. F. Gonzalez, “Handbook of approximation algorithms and metaheuristics,” Handb. Approx. Algorithms Metaheuristics, pp. 1–1432, 2007, doi: 10.1201/9781420010749.

K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” 3rd Int. Conf. Learn. Represent. ICLR 2015 - Conf. Track Proc., pp. 1–14, 2015.

S. Rani and P. Kumar, “Deep Learning Based Sentiment Analysis Using Convolution Neural Network,” Arab. J. Sci. Eng., vol. 44, no. 4, pp. 3305–3314, 2019, doi: 10.1007/s13369-018-3500-z.

Z. Yu and C. Zhang, “Image based static facial expression recognition with multiple deep network learning,” in ICMI 2015 - Proceedings of the 2015 ACM International Conference on Multimodal Interaction, Nov. 2015, pp. 435–442, doi: 10.1145/2818346.2830595.

A. Fernández-Caballero et al., “Smart environment architecture for emotion detection and regulation,” J. Biomed. Inform., vol. 64, pp. 55–73, Dec. 2016, doi: 10.1016/j.jbi.2016.09.015.

K. Clawson, L. S. Delicato, and C. Bowerman, “Human centric facial expression recognition,” Proc. 32nd Int. BCS Hum. Comput. Interact. Conf. HCI 2018, pp. 1–12, 2018, doi: 10.14236/ewic/HCI2018.44.

André Sanches Fonseca Sobrinho. (2020). An Embedded Systems Remote Course. Journal of Online Engineering Education, 11(2), 01–07. Retrieved from http://onlineengineeringeducation.com/index.php/joee/article/view/39

S. Nigam, R. Singh, and A. K. Misra, “Efficient facial expression recognition using histogram of oriented gradients in wavelet domain,” Multimed. Tools Appl., vol. 77, no. 21, pp. 28725–28747, 2018, doi: 10.1007/s11042-018-6040-3.

Institute of Electrical and Electronics Engineers and IEEE Signal Processing Society, 2013 IEEE International Conference on Image Processing : ICIP 2013 : proceedings : September 15-18, 2013, Melbourne, Victoria, Australia. .

H. H. Tsai and Y. C. Chang, “Facial expression recognition using a combination of multiple facial features and support vector machine,” Soft Comput., vol. 22, no. 13, pp. 4389–4405, Jul. 2018, doi: 10.1007/s00500-017-2634-3.

Q. Wu, X. Shen, and X. Fu, “The machine knows what you are hiding: An automatic micro-expression recognition system,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2011, vol. 6975 LNCS, no. PART 2, pp. 152–162, doi: 10.1007/978-3-642-24571-8_16.

A. T. Lopes, E. de Aguiar, A. F. De Souza, and T. Oliveira-Santos, “Facial expression recognition with Convolutional Neural Networks: Coping with few data and the training sample order,” Pattern Recognit., vol. 61, pp. 610–628, 2017, doi: 10.1016/j.patcog.2016.07.026.

L. Bacon et al., Proceedings, 2017 15th IEEE/ACIS International Conference on Software Engineering Research, Management and Applications (SERA) : June 7-9, 2017, the University of Greenwich, London, UK. .

H. W. Ng, V. D. Nguyen, V. Vonikakis, and S. Winkler, “Deep learning for emotion recognition on small datasets using transfer learning,” in ICMI 2015 - Proceedings of the 2015 ACM International Conference on Multimodal Interaction, Nov. 2015, pp. 443–449, doi: 10.1145/2818346.2830593.

C. C. Atabansi, T. Chen, R. Cao, and X. Xu, “Transfer Learning Technique with VGG-16 for Near-Infrared Facial Expression Recognition,” J. Phys. Conf. Ser., vol. 1873, no. 1, 2021, doi: 10.1088/1742-6596/1873/1/012033.

L. D. Nguyen, D. Lin, Z. Lin, and J. Cao, “Deep CNNs for microscopic image classification by exploiting transfer learning and feature concatenation,” Proc. - IEEE Int. Symp. Circuits Syst., vol. 2018-May, no. June, 2018, doi: 10.1109/ISCAS.2018.8351550.

O. Russakovsky et al., “ImageNet Large Scale Visual Recognition Challenge,” Int. J. Comput. Vis., vol. 115, no. 3, pp. 211–252, 2015, doi: 10.1007/s11263-015-0816-y.

A. Alsayat, “Improving Sentiment Analysis for Social Media Applications Using an Ensemble Deep Learning Language Model,” Arab. J. Sci. Eng., 2021, doi: 10.1007/s13369-021-06227-w.

K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., vol. 2016-Decem, pp. 770–778, 2016, doi: 10.1109/CVPR.2016.90.

Garg, D. K. . (2022). Understanding the Purpose of Object Detection, Models to Detect Objects, Application Use and Benefits. International Journal on Future Revolution in Computer Science &Amp; Communication Engineering, 8(2), 01–04. https://doi.org/10.17762/ijfrcsce.v8i2.206646. W. Wang, Y. Li, T. Zou, X. Wang, J. You, and Y. Luo, “A novel image classification approach via dense-mobilenet models,” Mob. Inf. Syst., vol. 2020, 2020, doi: 10.1155/2020/7602384.

Dursun, M., & Goker, N. (2022). Evaluation of Project Management Methodologies Success Factors Using Fuzzy Cognitive Map Method: Waterfall, Agile, And Lean Six Sigma Cases. International Journal of Intelligent Systems and Applications in Engineering, 10(1), 35–43. https://doi.org/10.18201/ijisae.2022.265

P. Lucey et al., “IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops,” IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. Work., vol. 4, no. July, pp. 94–101, 2003.

M. Kamachi, M. Lyons, and J. Gyoba, “The japanese female facial expression (jaffe) database,” URL http//www. kasrl. org/jaffe. html, vol. 21, no. January, p. 32, 1998.

M. Bartlett, J. Hager, P. Ekman, T. S.- Psychophysiology, and U. 1999, “Bartlett et al._1999_Psychophysiology_Measuring facial expressions by computer image analysis.pdf,” Cambridge.Org. Online. . Available: https://www.cambridge.org/core/journals/psychophysiology/article/measuring-facial-expressions-by-computer-image-analysis/B5A746609CF2198C688A0F28114CDF60.

P. S. P. Wang, “Performance comparisons of facial expression recognition in jaffe database,” vol. 22, no. 3, pp. 445–459, 2008.

M. O. Dwairi, Z. Alqadi, and R. A. Zneit, “Optimized True-Color Image Processing Optimized True-Color Image Processing,” no. August 2016, 2010.

M. S. Bartlett, G. Littlewort, M. Frank, C. Lainscsek, I. Fasel, and J. Movellan, “2005. Bartlett et al - REcognizing Facial expression - machine learning and application.”

C. Shan, S. Gong, and P. W. McOwan, “Facial expression recognition based on Local Binary Patterns: A comprehensive study,” Image Vis. Comput., vol. 27, no. 6, pp. 803–816, 2009, doi: 10.1016/j.imavis.2008.08.005.

T. Jabid, M. H. Kabir, and O. Chae, “Robust facial expression recognition based on local directional pattern,” ETRI J., vol. 32, no. 5, pp. 784–794, 2010, doi: 10.4218/etrij.10.1510.0132.

S. Tivatansakul, M. Ohkura, S. Puangpontip, and T. Achalakul, “Emotional healthcare system: Emotion detection by facial expressions using Japanese database,” 2014 6th Comput. Sci. Electron. Eng. Conf. CEEC 2014 - Conf. Proc., pp. 41–46, 2014, doi: 10.1109/CEEC.2014.6958552.

S. TIVATANSAKUL and M. OHKURA, “Healthcare System Focusing on Emotional Aspect using Augmented Reality,” Trans. Japan Soc. Kansei Eng., vol. 13, no. 1, pp. 191–201, 2014, doi: 10.5057/jjske.13.191.

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. Under this license, users must give appropriate credit, provide a link to the license, and indicate whether changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.