MHA_VGG19: Multi-Head Attention with VGG19 Backbone Classifier-based Face Recognition for Real-Time Security Applications

Keywords:

Classification, face recognition, feature extraction, gamma correction, neural networks

Abstract

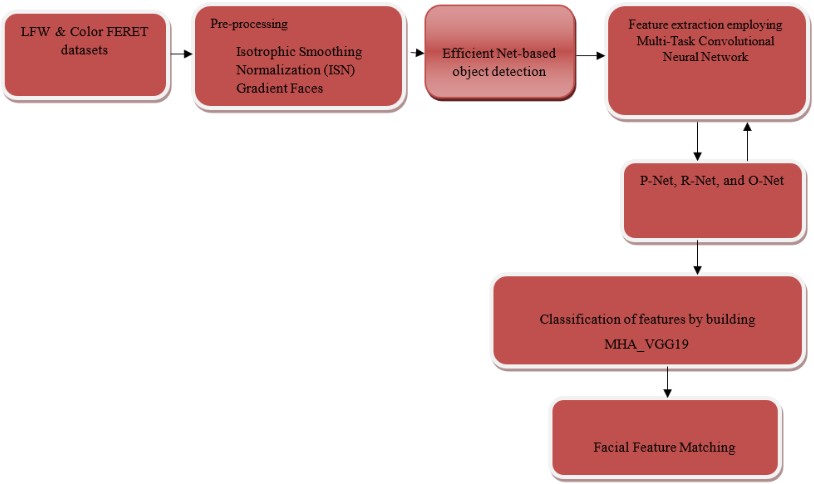

Face recognition is a widely used biometric verification approach that analyzes face images and extracts useful identifying information from them, commonly called a feature vector, which is used to distinguish individuals by their biological features. The face recognition process begins by extracting the coordinates of features such as mouth width, eye width, and the pupils, and comparing them with a stored face template. The objective of the proposed scheme is to build a standalone security system that performs face recognition-based surveillance together with a hardware mechanism for locking the protected area. Surveillance-camera photographs of people tend to be of Low Resolution (LR), which makes them difficult to match with High Resolution (HR) images, and existing approaches such as super-resolution, coupled mappings, multidimensional scaling, and convolutional neural networks are only moderately effective in practice. This study proposes Multi-Head Attention with a VGG19 Backbone (MHA_VGG19), which is trained on face images at three substantially different resolutions in order to extract distinctive features that are robust to changes in resolution. The method also quantizes the image samples into a topological space in which inputs that are close in the original space remain close in the output space; consequently, it provides dimensionality reduction and invariance to small changes in the image sample. The proposed method is extensively evaluated on the LFW and Color FERET datasets and compared with state-of-the-art methods on several criteria. On the Color FERET database, MHA_VGG19 attains 98.69% accuracy, 99.06% precision, 98.51% recall, a 98.75% F1-score, and a 100% ROC. On the LFW database, it attains 95.96% accuracy, 96.41% precision, 95.73% recall, a 96% F1-score, and a 99.83% ROC.
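The matching step described in the abstract (a feature vector compared against a stored face template, with the result driving a lock) can be illustrated with a short Python sketch. It assumes cosine similarity as the comparison rule and a decision threshold of 0.8; the function names `cosine_similarity` and `match_template`, the threshold, and the 4-D vectors are all hypothetical stand-ins for embeddings that a trained network such as MHA_VGG19 would produce.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_template(probe: Sequence[float],
                   templates: Dict[str, Sequence[float]],
                   threshold: float = 0.8) -> Optional[str]:
    """Return the enrolled identity whose stored template is most similar to
    the probe, or None if no similarity reaches the (hypothetical) threshold."""
    best_id, best_score = None, -1.0
    for identity, template in templates.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = identity, score
    return best_id if best_score >= threshold else None


# Hypothetical 4-D feature vectors standing in for network embeddings.
enrolled = {"alice": [0.9, 0.1, 0.3, 0.2], "bob": [0.1, 0.8, 0.1, 0.5]}
probe = [0.85, 0.15, 0.25, 0.2]
identity = match_template(probe, enrolled)
print("unlock" if identity else "keep locked", identity)  # unlock alice
```

Raising `threshold` rejects more impostors at the cost of turning away more genuine users; in a lock-control deployment it would normally be tuned on held-out data.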

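The abstract states that the network is trained on face images at three very different resolutions, and the keywords list gamma correction, but neither the resolutions nor the gamma value are given here. The sketch below therefore assumes grayscale images stored as nested lists of values in [0, 255], a single gamma of 0.8 (hypothetical), and downsampling factors of 1, 2, and 4 (hypothetical) to simulate high-, medium-, and low-resolution views. Each view is upsampled back to the original size so that a fixed-input network could consume all three.

```python
from typing import List, Sequence

Image = List[List[float]]  # grayscale, values in [0, 255]


def gamma_correct(img: Image, gamma: float) -> Image:
    """Power-law (gamma) correction: out = 255 * (in / 255) ** gamma.
    gamma < 1 brightens dark regions, gamma > 1 darkens them."""
    return [[255.0 * (p / 255.0) ** gamma for p in row] for row in img]


def box_downsample(img: Image, factor: int) -> Image:
    """Average non-overlapping factor x factor blocks (sizes must divide evenly)."""
    h, w = len(img), len(img[0])
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    return [[sum(img[y * factor + dy][x * factor + dx]
                 for dy in range(factor) for dx in range(factor)) / factor ** 2
             for x in range(w // factor)]
            for y in range(h // factor)]


def nearest_upsample(img: Image, factor: int) -> Image:
    """Repeat each pixel factor x factor times to restore the original size."""
    return [[p for p in row for _ in range(factor)] for row in img for _ in range(factor)]


def multi_resolution_views(img: Image, factors: Sequence[int] = (1, 2, 4),
                           gamma: float = 0.8) -> List[Image]:
    """Gamma-correct once, then build one view per (hypothetical) downsampling
    factor, each resized back to the input size."""
    corrected = gamma_correct(img, gamma)
    return [nearest_upsample(box_downsample(corrected, f), f) for f in factors]


# Synthetic 8x8 test pattern in place of a real face crop.
img = [[float((x + y) * 32 % 256) for x in range(8)] for y in range(8)]
views = multi_resolution_views(img)
print([(len(v), len(v[0])) for v in views])  # [(8, 8), (8, 8), (8, 8)]
```

Box averaging followed by nearest-neighbour upsampling is one simple way to mimic the blur and blockiness of low-resolution surveillance frames; a real pipeline would more likely use a library resampler with proper interpolation.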

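A multi-head self-attention layer of the kind named in MHA_VGG19 can be sketched over the spatial positions of a backbone feature map, treating each position as a token (for a 224×224 input, VGG19's final pooling layer yields a 7×7×512 map, i.e. 49 tokens of 512 channels). The abstract does not describe how attention is attached to VGG19, so this is a generic scaled dot-product formulation rather than the paper's design: the backbone itself is omitted, the projection weights are random rather than learned, and the class name `MultiHeadSelfAttention` and the mean-pooling step are assumptions. Pure Python is used so the example runs without a deep-learning framework.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    scale = 1.0 / math.sqrt(rows)
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class MultiHeadSelfAttention:
    """Scaled dot-product self-attention split across several heads.
    The projection weights are random placeholders; a trained model learns them."""

    def __init__(self, d_model: int, num_heads: int, seed: int = 0):
        if d_model % num_heads != 0:
            raise ValueError("d_model must be divisible by num_heads")
        rng = random.Random(seed)
        self.num_heads = num_heads
        self.d_k = d_model // num_heads
        self.w_q, self.w_k, self.w_v, self.w_o = (
            random_matrix(d_model, d_model, rng) for _ in range(4)
        )

    def __call__(self, x: Matrix) -> Matrix:
        """x: one row per token (e.g. one per spatial cell of a feature map)."""
        q, k, v = matmul(x, self.w_q), matmul(x, self.w_k), matmul(x, self.w_v)
        heads = []
        for h in range(self.num_heads):
            cols = slice(h * self.d_k, (h + 1) * self.d_k)  # this head's channels
            q_h = [row[cols] for row in q]
            k_h = [row[cols] for row in k]
            v_h = [row[cols] for row in v]
            scores = matmul(q_h, transpose(k_h))
            weights = [softmax([s / math.sqrt(self.d_k) for s in row]) for row in scores]
            heads.append(matmul(weights, v_h))
        # Concatenate the heads' outputs per token, then apply the output projection.
        concat = [sum((head[t] for head in heads), []) for t in range(len(x))]
        return matmul(concat, self.w_o)


# Toy stand-in for a backbone feature map: a 2x2 grid of 8-channel cells -> 4 tokens.
rng = random.Random(42)
tokens = [[rng.uniform(-1.0, 1.0) for _ in range(8)] for _ in range(4)]
mha = MultiHeadSelfAttention(d_model=8, num_heads=2)
out = mha(tokens)
embedding = [sum(col) / len(out) for col in zip(*out)]  # mean-pool over tokens
print(len(out), len(out[0]), len(embedding))  # 4 8 8
```

In a trained model, `w_q`, `w_k`, `w_v`, and `w_o` would be learned jointly with the backbone, and the pooled embedding would feed a classifier or a template-matching step.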

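The evaluation figures reported in the abstract (accuracy, precision, recall, F1-score, and ROC) can be computed from a classifier's outputs as follows. This sketch assumes a binary match / non-match setting with hypothetical counts and scores rather than the paper's data, and it assumes that the reported "ROC" percentage refers to the area under the ROC curve, computed here as the probability that a randomly chosen positive scores higher than a randomly chosen negative. A multi-identity classifier would need these metrics averaged over classes (for example, macro-averaging), which the abstract does not specify.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class BinaryCounts:
    """Confusion-matrix counts for a match / non-match decision."""
    tp: int
    fp: int
    tn: int
    fn: int


def classification_metrics(c: BinaryCounts) -> Dict[str, float]:
    total = c.tp + c.fp + c.tn + c.fn
    accuracy = (c.tp + c.tn) / total
    precision = c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0
    recall = c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve, computed as the probability that a random
    positive scores higher than a random negative (ties count as half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical counts and scores, not results from the paper.
m = classification_metrics(BinaryCounts(tp=90, fp=5, tn=100, fn=5))
print({k: round(v, 4) for k, v in m.items()})
# {'accuracy': 0.95, 'precision': 0.9474, 'recall': 0.9474, 'f1': 0.9474}
print(roc_auc([0.9, 0.3, 0.8, 0.2], [1, 1, 0, 0]))  # 0.75
```

The pairwise AUC loop is quadratic in the number of samples, which is fine for a sketch; large evaluation sets would use a sort-based (rank) formulation instead.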
License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license lets readers share and adapt the material provided that they give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.