Multi-Class Human Activity Prediction using Deep Learning Algorithm

Keywords:

Human activity, EfficientNET, Pose estimation, TimeSformer, Optical flowAbstract

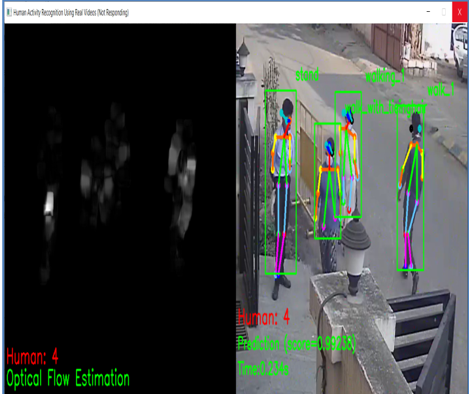

The most common technique for understanding human behavior is action recognition based on videos. Videos provide significantly more information than image-based action recognition. Reducing action ambiguity as well as several dataset-focused studies, innovative models, and learning strategies over the past ten years have elevated video action identification to a higher level. However, there are difficulties and unresolved issues, particularly in real-time CCTV analytics where data gathering and labeling are more complex, necessitating the annotation of data. Additionally, the actions could happen very quickly, making it challenging to distinguish between them. This paper presented a video based multiple human activity recognition for real-time CCTV camera videos. To propose a HAR to introduce an Enhanced TimeSformer Model with Multi-Layer Perceptron (ETMLP) Neural Networks classification algorithm applies self-attention over the patches and human regions. By interacting with uniform classification tokens in this manner and enhancing them with contextualized human activity data, visual human pose estimation areas are able to estimate human pose.

Downloads

References

K. Soomro, A. R. Zamir, and M. Shah, “Ucf101: A dataset of 101 human actions classes from videos in the wild,” arXiv preprint arXiv:1212.0402, 2012

H. Kuehne, H. Jhuang, E. Garrote, T. Poggio, and T. Serre, “Hmdb: a large video database for human motion recognition,” in 2011 International conference on computer vision. IEEE, 2011, pp. 2556–2563.

F. Caba Heilbron, V. Escorcia, B. Ghanem, and J. Carlos Niebles, “Activitynet: A large-scale video benchmark for human activity understanding,” in Proceedings of the ieee conference on computer vision and pattern recognition, 2015, pp. 961–970.

S. Abu-El-Haija, N. Kothari, J. Lee, P. Natsev, G. Toderici, B. Varadarajan, and S. Vijayanarasimhan, “Youtube-8m: A large-scale video classification benchmark,” 2016.

J. Carreira and A. Zisserman, “Quo vadis, action recognition? a new model and the kinetics dataset,” in proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 6299–6308.

C. Gu, C. Sun, D. A. Ross, C. Vondrick, C. Pantofaru, Y. Li, S. Vijayanarasimhan, G. Toderici, S. Ricco, R. Sukthankar et al., “Ava: A video dataset of spatio-temporally localized atomic visual actions,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 6047–6056.

R. Goyal, S. Ebrahimi Kahou, V. Michalski, J. Materzynska, S. Westphal, H. Kim, V. Haenel, I. Fruend, P. Yianilos, M. Mueller-Freitag et al., “The “something something" video database for learning and evaluating visual common sense,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 5842–5850.

A. Arnab, M. Dehghani, G. Heigold, C. Sun, M. Luˇci´c, and C. Schmid, “Vivit: A video vision transformer,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 6836–6846.

Y. Li, B. Ji, X. Shi, J. Zhang, B. Kang, and L. Wang, “Tea: Temporal excitation and aggregation for action recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 909–918.

W. Kay, J. Carreira, K. Simonyan, B. Zhang, C. Hillier, S. Vijayanarasimhan, F. Viola, T. Green, T. Back, P. Natsev et al., “The kinetics human action video dataset,” 2017.

Gedas Bertasius, Heng Wang, and Lorenzo Torresani. Is space-time attention all you need for video understanding? NeurIPS, 2021.

Anurag Arnab, Mostafa Dehghani, Georg Heigold, Chen Sun, Mario Lucic, and Cordelia Schmid. Vivit: A video vision transformer. ICCV, 2021.

Fan Haoqi, Xiong Bo, Mangalam Karttikeya, Li Yanghao, Yan Zhicheng, Malik Jitendra, and Feichtenhofer Christoph. Multiscale vision transformers, 2021.

Simon Ging, Mohammadreza Zolfaghari, Hamed Pirsiavash, and Thomas Brox. Coot: Cooperative hierarchical transformer for video-text representation learning. In NeurIPS, 2020.

Ranjay Krishna, Kenji Hata, Frederic Ren, Li Fei-Fei, and Juan Carlos Niebles. Dense-captioning events in videos. In CVPR, 2017

Linjie Li, Jie Lei, Zhe Gan, Licheng Yu, Yen-Chun Chen, Rohit Pillai, Yu Cheng, Luowei Zhou, Xin Eric Wang, William YangWang, et al. Value: A multi-task benchmark for video-and-language understanding evaluation. arXiv preprint arXiv:2106.04632, 2021.

Anurag Arnab, Mostafa Dehghani, Georg Heigold, Chen Sun, Mario Luˇci´c, and Cordelia Schmid. Vivit: A video vision transformer. ICCV, 2021.

Will Kay, Joao Carreira, Karen Simonyan, Brian Zhang, Chloe Hillier, Sudheendra Vijayanarasimhan, Fabio Viola, Tim Green, Trevor Back, Paul Natsev, et al. The kinetics human action video dataset. arXiv preprint arXiv:1705.06950, 2017

Ranjay Krishna, Kenji Hata, Frederic Ren, Li Fei-Fei, and Juan Carlos Niebles. Dense-captioning events in videos. In CVPR, 2017

Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, and Jifeng Dai. Vl-bert: Pre-training of generic visuallinguistic representations. ICLR, 2020

Girdhar, R., Carreira, J., Doersch, C., and Zisserman, A. Video action transformer network. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR, 2019.

Feichtenhofer, C. X3d: Expanding architectures for efficient video recognition. CVPR, pp. 200–210, 2020.

Bertasius, G. and Torresani, L. Classifying, segmenting, and tracking object instances in video with mask propagation. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

Carion, N., Massa, F., Synnaeve, G., Usunier, N., Kirillov, A., and Zagoruyko, S. End-to-end object detection with transformers. In European Conference Computer Vision (ECCV), 2020.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., and Houlsby, N. An image is worth 16x16 words: Transformers for image recognition at scale. CoRR, 2020.

Sevilla-Lara, L., Zha, S., Yan, Z., Goswami, V., Feiszli, M., and Torresani, L. Only time can tell: 24 Discovering temporal data for temporal modeling. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), pp. 535–544, January 2021.

Jitha Janardhanan and S. Umamaheswari, "Vision based Human Activity Recognition using Deep Neural Network Framework", International Journal of Advanced Computer Science and Applications(IJACSA), Volume 13 Issue 6, 2022.

Yu Zhao, Rennong Yang, Guillaume Chevalier, Ximeng Xu, and Zhenxing Zhang, “Deep Residual Bidir-LSTM for Human Activity Recognition Using Wearable Sensors”, Mathematical Problems in Engineering, ,Volume 2018.

Ba, L. J., Kiros, J. R., and Hinton, G. E. Layer normalization. CoRR, 2016.

Jitha Janardhanan and S. Umamaheswari, “Recognizing Multiple Human Activities Using Deep Learning Framework”, Revue d'Intelligence Artificielle,International Inforamtion and Engineering Technology Association (IIETA), ACCEPTED

Downloads

Published

How to Cite

Issue

Section

License

Copyright (c) 2022 Jitha Janardhanan, S. Umamaheswari

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

All papers should be submitted electronically. All submitted manuscripts must be original work that is not under submission at another journal or under consideration for publication in another form, such as a monograph or chapter of a book. Authors of submitted papers are obligated not to submit their paper for publication elsewhere until an editorial decision is rendered on their submission. Further, authors of accepted papers are prohibited from publishing the results in other publications that appear before the paper is published in the Journal unless they receive approval for doing so from the Editor-In-Chief.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license lets the audience to give appropriate credit, provide a link to the license, and indicate if changes were made and if they remix, transform, or build upon the material, they must distribute contributions under the same license as the original.