Exploring the Generalization Capacity of Over-Parameterized Networks

Keywords:

Deep learning, over-parameterized deep neural networks, generalization, over-fitting, memorization, CIFAR-10, VGG, Manifold learning, PCA

Abstract

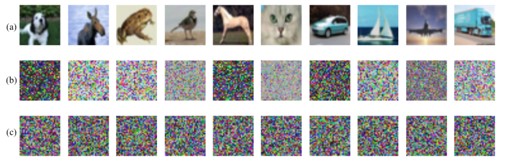

Most over-parameterized deep neural networks generalize well on true data. Over-parameterized networks can even learn from true data whose bytes have been randomly shuffled, performing far better than random guessing. Even in this scenario, where local relationships are largely lost, over-parameterized networks show generalization capability. In this paper, multiple models are trained on various subsets and derivations of the CIFAR-10 data. The models are trained on true images with true labels, randomly shuffled bytes with true labels, ordered shuffled bytes with true labels, and randomly generated noise. Shuffling bytes instead of pixels can distort an image severely, yet over-parameterized models are still able to generalize on such data. The experiments show that generalization is easier on true pixels than on randomly shuffled bytes, and that generalization on randomly shuffled bytes is similar to generalization on ordered shuffled pixel data. Memorization, however, is easier and is not affected by any relationships in the data.
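The data derivations named in the abstract can be illustrated with a minimal NumPy sketch. The abstract does not define the shuffling procedures, so the following are assumptions made only for illustration: "randomly shuffled bytes" is taken to mean an independent permutation of the flattened byte values of each image, "ordered shuffled" is taken to mean one fixed permutation reused for every image, and a "pixel" shuffle is taken to move each RGB triple as a unit. All function names are hypothetical, and a small random array stands in for CIFAR-10 images.

```python
import numpy as np


def shuffle_bytes_random(images, rng):
    """Assumed 'randomly shuffled bytes': each image gets its own permutation."""
    flat = images.reshape(len(images), -1)
    # Generator.permuted shuffles every row independently along axis=1.
    return rng.permuted(flat, axis=1).reshape(images.shape)


def shuffle_bytes_ordered(images, rng):
    """Assumed 'ordered shuffled bytes': one permutation shared by all images."""
    flat = images.reshape(len(images), -1)
    perm = rng.permutation(flat.shape[1])
    return flat[:, perm].reshape(images.shape)


def shuffle_pixels_ordered(images, rng):
    """Assumed 'ordered shuffled pixels': RGB triples move together."""
    n, h, w, c = images.shape
    pixels = images.reshape(n, h * w, c)
    perm = rng.permutation(h * w)
    return pixels[:, perm, :].reshape(images.shape)


def random_noise_like(images, rng):
    """Uniform random bytes with the same shape as the input images."""
    return rng.integers(0, 256, size=images.shape, dtype=np.uint8)


rng = np.random.default_rng(0)
# Stand-in for a CIFAR-10 batch: 4 images of 32x32 pixels with 3 channels.
images = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)

random_bytes = shuffle_bytes_random(images, rng)
ordered_bytes = shuffle_bytes_ordered(images, rng)
ordered_pixels = shuffle_pixels_ordered(images, rng)
noise = random_noise_like(images, rng)
```

Under these assumptions, a byte-level shuffle mixes values across color channels as well as across positions, which is one way to read the abstract's remark that shuffling bytes distorts an image more than shuffling pixels. Every shuffled variant keeps the multiset of byte values of each image, whereas the noise variant discards the image content entirely.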

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license requires users to give appropriate credit, provide a link to the license, and indicate if changes were made. If they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.