Semantic Marginal Autoencoder Model for the Word Embedding Technique for the Marginal Denoising in the Different Languages

Keywords:

Semantics, Word Embedding, Marginal Estimation, Neighbourhood Estimation, Accuracy

Abstract

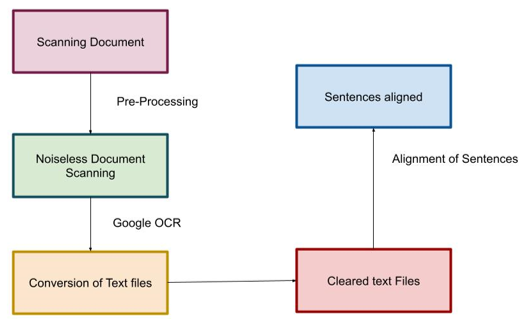

Words are composed of smaller elements, and analysing these elements helps in selecting effective semantic representations across languages. Conventional semantic techniques face challenges in incorporating different feature variables into the computation, and word embedding is further complicated by differences in features between languages. This paper aims to develop an effective semantic model integrated with an autoencoder. The proposed model, termed Semantic Marginal Auto Encoder (SMarginalAE), targets sequences in different languages and combines marginal features with neighborhood estimation of the features. SMarginalAE achieves a neighborhood accuracy of 92.45% and a pair-wise accuracy of 88.94%. The comparative analysis indicates that the proposed SMarginalAE framework performs approximately 3% better than conventional techniques.
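The abstract does not give the model's equations. As a rough illustration of what "marginal denoising" commonly refers to, the sketch below implements one layer of a closed-form marginalized denoising autoencoder over a matrix of embedding vectors. This is an assumption about the underlying technique and not the authors' SMarginalAE; the function name `marginalized_denoising_layer`, the corruption probability `p`, and the regulariser `reg` are hypothetical choices made for illustration.

```python
import numpy as np


def marginalized_denoising_layer(X, p, reg=1e-5):
    """One marginalized denoising autoencoder layer (illustrative sketch).

    X   : array of shape (d, n), one embedding vector per column.
    p   : probability that each feature is zeroed by the corruption.
    reg : small ridge term that keeps the linear system well conditioned.

    Returns the mapping W of shape (d, d + 1) and the hidden
    representation tanh(W @ [X; 1]) of shape (d, n).
    """
    d, n = X.shape
    # Append a constant row so the mapping can learn a bias term.
    Xb = np.vstack([X, np.ones((1, n))])
    # Survival probability per feature; the bias row is never corrupted.
    q = np.concatenate([np.full(d, 1.0 - p), [1.0]])
    S = Xb @ Xb.T
    # Expected scatter of the corrupted inputs.
    Q = S * np.outer(q, q)
    np.fill_diagonal(Q, q * np.diag(S))
    # Expected cross-scatter between clean and corrupted inputs.
    P = S * q[np.newaxis, :]
    # W = P[:d] @ inv(Q + reg * I); solved without forming the inverse
    # (Q is symmetric, so solving against it directly is valid).
    W = np.linalg.solve(Q + reg * np.eye(d + 1), P[:d].T).T
    return W, np.tanh(W @ Xb)
```

Because the expectation over corruptions is computed analytically, no noisy copies of the data need to be sampled. Calling `marginalized_denoising_layer(X, p=0.5)` on a `(d, n)` embedding matrix returns the learned mapping together with a denoised hidden representation of the same shape as `X`.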

License

Copyright (c) 2023 Deepak Kumar, L. Vertivendan, K. Velmurugan, Kumarasamy M., Dhanashree Toradmalle, Khan Vajid Nabilal

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. Under this license, users must give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.