Indonesian News Classification Using IndoBert

Keywords:

Natural Language Processing, News Classification, IndoBERT, Multiclass Classification

Abstract

In 2021, the number of internet users rose from 175 million to 202 million. News classification in general still relies on traditional techniques, such as TF-IDF word representations combined with machine learning. A more recent development in NLP that can be used to classify news is BERT, a state-of-the-art pre-trained model. However, the original pre-trained BERT model is limited to English. This study therefore uses IndoBERT to make news recommendations based on category. The study uses an Indonesian news dataset with five categories: football, news, business, technology, and automotive. IndoBERT is compared with other pre-trained models, namely XLNet, multilingual BERT, and XLM-RoBERTa, and with machine learning methods that use TF-IDF representations, namely XGBoost, LightGBM (LGB), and random forest. The results show that classification with IndoBERT gives the best results, with an accuracy of 94%, and also the shortest computation time of the methods compared, with a time of 1 minute 56 seconds and a validation time of 10 seconds. IndoBERT gives the best results because it is a pre-trained model trained on a variety of Indonesian text, such as news and several website sources, which enlarges the vocabulary corpus available to the model. Future research will implement a dual IndoBERT model and a Siamese IndoBERT model.
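
To make the TF-IDF baseline mentioned in the abstract more concrete, the following is a minimal sketch in plain Python. It is not the authors' pipeline: the headlines are made-up toy examples rather than items from the dataset, the smoothed IDF formula is one common variant, and a simple nearest-neighbour rule on cosine similarity stands in for the XGBoost, LightGBM, and random forest classifiers named above. All function names (`fit_idf`, `tfidf`, `classify`) are hypothetical.

```python
import math
from collections import Counter

# Toy, made-up headlines for illustration only; NOT taken from the paper's dataset.
TRAIN = [
    ("football", "tim sepak bola menang di liga"),
    ("business", "harga saham naik di bursa"),
    ("technology", "ponsel baru dengan kamera canggih"),
    ("automotive", "mobil listrik baru diluncurkan"),
]


def tokenize(text):
    """Lowercase and split on whitespace (an assumed, very simple tokenizer)."""
    return text.lower().split()


def fit_idf(docs):
    """Smoothed inverse document frequency for every term seen in docs."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return {term: math.log((1 + n) / (1 + count)) + 1.0 for term, count in df.items()}


def tfidf(tokens, idf):
    """L2-normalised TF-IDF vector as a sparse dict; unseen terms are dropped."""
    tf = Counter(t for t in tokens if t in idf)
    vec = {t: c * idf[t] for t, c in tf.items()}
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {t: v / norm for t, v in vec.items()} if norm else {}


def cosine(a, b):
    """Dot product of two sparse vectors; equals cosine when both are L2-normalised."""
    return sum(w * b.get(t, 0.0) for t, w in a.items())


def classify(text, train, idf):
    """Return the label of the training headline most similar to text.

    Nearest-neighbour stand-in for the tree-based classifiers in the abstract.
    """
    query = tfidf(tokenize(text), idf)
    scored = [(cosine(query, tfidf(tokenize(doc), idf)), label) for label, doc in train]
    return max(scored)[1]


IDF = fit_idf([tokenize(doc) for _, doc in TRAIN])
prediction = classify("saham bank naik", TRAIN, IDF)
print(prediction)
```

The sketch only illustrates the representation: each headline becomes a sparse vector whose weights grow with term frequency and shrink for terms that occur in many documents. A pre-trained model such as IndoBERT replaces these hand-built vectors with contextual representations learned during pre-training.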

References

A. T. Haryanto, “Riset: Ada 175,4 Juta Pengguna Internet di Indonesia,” https://inet.detik.com/, 2020. https://inet.detik.com/cyberlife/d-4907674/riset-ada-1752-juta-pengguna-internet-di-indonesia.

P. Agustini, “Warganet Meningkat, Indonesia Perlu Tingkatkan Nilai Budaya di Internet,” aptika.kominfo.go.id, 2021. https://aptika.kominfo.go.id/2021/09/warganet-meningkat-indonesia-perlu-tingkatkan-nilai-budaya-di-internet/ (accessed Sep. 12, 2021).

A. Watson, “Average circulation of the Wall Street Journal from 2018 to 2020,” www.statista.com, 2020. https://www.statista.com/statistics/193788/average-paid-circulation-of-the-wall-street-journal/ (accessed Jul. 07, 2021).

J. P. Haumahu, S. D. H. Permana, and Y. Yaddarabullah, “Fake news classification for Indonesian news using Extreme Gradient Boosting (XGBoost),” IOP Conf. Ser. Mater. Sci. Eng., vol. 1098, no. 5, p. 052081, 2021, doi: 10.1088/1757-899x/1098/5/052081.

S. Y. J. Prasetyo, Y. B. Christianto, and K. D. Hartomo, “Analisis Data Citra Landsat 8 OLI Sebagai Indeks Prediksi Kekeringan Menggunakan Machine Learning di Wilayah Kabupaten Boyolali dan Purworejo,” Indones. J. Model. Comput., vol. 2, no. 2, pp. 25–36, 2019, [Online]. Available: https://ejournal.uksw.edu/icm/article/view/2954.

D. A. McCarty, H. W. Kim, and H. K. Lee, “Evaluation of light gradient boosted machine learning technique in large scale land use and land cover classification,” Environ. - MDPI, vol. 7, no. 10, pp. 1–22, 2020, doi: 10.3390/environments7100084.

A. K. Singh and M. Shashi, “Vectorization of text documents for identifying unifiable news articles,” Int. J. Adv. Comput. Sci. Appl., vol. 10, no. 7, pp. 305–310, 2019, doi: 10.14569/ijacsa.2019.0100742.

S. González-Carvajal and E. C. Garrido-Merchán, “Comparing BERT against traditional machine learning text classification,” no. Ml, 2020, [Online]. Available: http://arxiv.org/abs/2005.13012.

K. S. Nugroho, A. Y. Sukmadewa, and N. Yudistira, “Large-Scale News Classification using BERT Language Model: Spark NLP Approach,” 2021, [Online]. Available: http://arxiv.org/abs/2107.06785.

K. Thandar Nwet, “Machine Learning Algorithms for Myanmar News Classification,” Int. J. Nat. Lang. Comput., vol. 8, no. 4, pp. 17–24, 2019, doi: 10.5121/ijnlc.2019.8402.

S. MAHAJAN, “News Classification Using Machine Learning,” Int. J. Recent Innov. Trends Comput. Commun., vol. 9, no. 5, pp. 23–27, 2021, doi: 10.17762/ijritcc.v9i5.5464.

K. Shah, H. Patel, D. Sanghvi, and M. Shah, “A Comparative Analysis of Logistic Regression, Random Forest and KNN Models for the Text Classification,” Augment. Hum. Res., vol. 5, no. 1, 2020, doi: 10.1007/s41133-020-00032-0.

W. K. Sari, D. P. Rini, R. F. Malik, and I. S. B. Azhar, “Klasifikasi Teks Multilabel pada Artikel Berita Menggunakan Long Short- Term Memory dengan Word2Vec,” Resti, vol. 1, no. 10, pp. 276–285, 2017.

[C. Li, G. Zhan, and Z. Li, “News Text Classification Based on Improved Bi-LSTM-CNN,” Proc. - 9th Int. Conf. Inf. Technol. Med. Educ. ITME 2018, pp. 890–893, 2018, doi: 10.1109/ITME.2018.00199.

B. Büyüköz, A. Hürriyetoğlu, and A. Özgür, “Analyzing ELMo and DistilBERT on Socio-political News Classification,” Proc. Work. Autom. Extr. Socio-political Events from News 2020, no. May, pp. 9–18, 2020, [Online]. Available: https://www.aclweb.org/anthology/2020.aespen-1.4.

A. Chandra, “Indonesian News Dataset,” https://github.com/, 2020. https://github.com/andreaschandra/indonesian-news.

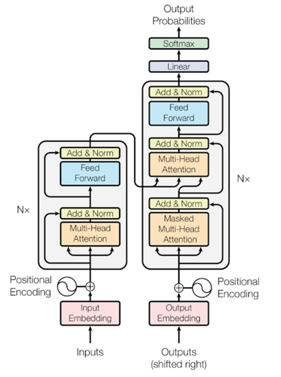

J. Devlin, M. W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of deep bidirectional transformers for language understanding,” NAACL HLT 2019 - 2019 Conf. North Am. Chapter Assoc. Comput. Linguist. Hum. Lang. Technol. - Proc. Conf., vol. 1, no. Mlm, pp. 4171–4186, 2019.

[F. Koto, A. Rahimi, J. H. Lau, and T. Baldwin, “IndoLEM and IndoBERT: A Benchmark Dataset and Pre-trained Language Model for Indonesian NLP,” pp. 757–770, 2021, doi: 10.18653/v1/2020.coling-main.66.

Z. Yang, Z. Dai, Y. Yang, J. Carbonell, R. Salakhutdinov, and Q. V. Le, “XLNet: Generalized autoregressive pretraining for language understanding,” Adv. Neural Inf. Process. Syst., vol. 32, no. NeurIPS, pp. 1–11, 2019.

[A. Conneau and G. Lample, “Cross-lingual language model pretraining,” Adv. Neural Inf. Process. Syst., vol. 32, 2019.

J. Libovický, R. Rosa, and A. Fraser, “On the Language Neutrality of Pre-trained Multilingual Representations,” pp. 1663–1674, 2020, doi: 10.18653/v1/2020.findings-emnlp.150.

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. Under this license, users must give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.