Multi-level Image Enhancement for Text Recognition System using Hybrid Filters

Keywords:

Image Enhancement, Text Recognition System, Gurmukhi, Typewritten Text, Filters

Abstract

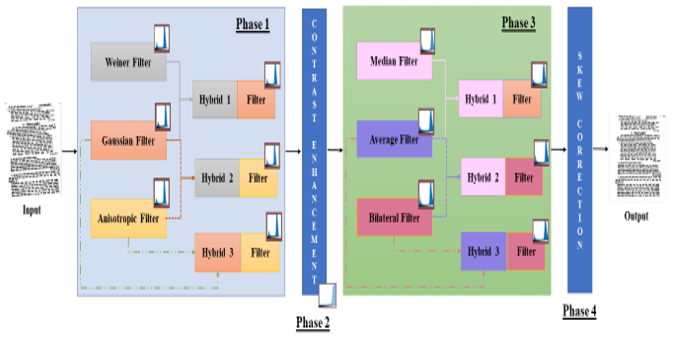

Image-based text recognition technology is widely used in applications such as optical character recognition (OCR) and document scanning. However, recognition accuracy depends heavily on the quality of the captured image. Environmental factors such as illumination, camera motion, and noise can degrade the acquired image, so its quality must be improved before it is passed to the recognition system. This research proposes a multi-level, hybrid filter-based image enhancement method to improve image quality. The effectiveness of the proposed method is evaluated using several metrics, including peak signal-to-noise ratio (PSNR), mean squared error (MSE), structural content (SC), and normalized absolute error (NAE). The results show that the proposed method effectively enhances the quality of the acquired image, which can substantially improve the performance of the text recognition system.
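To make the four evaluation metrics concrete, the sketch below computes MSE, PSNR, SC, and NAE using their commonly cited full-reference definitions for 8-bit grayscale images. It assumes that `ref` is the clean reference image and `test` is the processed one, and that the peak value is 255. The abstract does not state the exact formulas, peak value, or argument order the authors used, so this is an illustrative sketch and not the paper's implementation. SC in particular appears in some sources with the two images swapped. The function names are hypothetical.

```python
import math
from typing import Iterator, List, Tuple

Image = List[List[float]]  # hypothetical: grayscale image as rows of pixel intensities


def _check_shapes(ref: Image, test: Image) -> None:
    """Raise if the two images do not have identical dimensions."""
    if len(ref) != len(test) or any(len(r) != len(t) for r, t in zip(ref, test)):
        raise ValueError("images must have the same dimensions")


def _pairs(ref: Image, test: Image) -> Iterator[Tuple[float, float]]:
    """Yield (reference, test) pixel pairs in row-major order."""
    for r_row, t_row in zip(ref, test):
        for r, t in zip(r_row, t_row):
            yield float(r), float(t)


def mse(ref: Image, test: Image) -> float:
    """Mean squared error: average of (ref - test)^2 over all pixels."""
    _check_shapes(ref, test)
    diffs = [(r - t) ** 2 for r, t in _pairs(ref, test)]
    if not diffs:
        raise ValueError("images must not be empty")
    return sum(diffs) / len(diffs)


def psnr(ref: Image, test: Image, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE); higher is better."""
    err = mse(ref, test)
    if err == 0:
        return math.inf  # identical images have unbounded PSNR
    return 10.0 * math.log10(max_value ** 2 / err)


def structural_content(ref: Image, test: Image) -> float:
    """SC = sum(ref^2) / sum(test^2); values near 1.0 mean similar total energy."""
    _check_shapes(ref, test)
    num = sum(r * r for r, _ in _pairs(ref, test))
    den = sum(t * t for _, t in _pairs(ref, test))
    return num / den


def nae(ref: Image, test: Image) -> float:
    """Normalized absolute error: sum|ref - test| / sum|ref|; lower is better."""
    _check_shapes(ref, test)
    num = sum(abs(r - t) for r, t in _pairs(ref, test))
    den = sum(abs(r) for r, _ in _pairs(ref, test))
    return num / den


if __name__ == "__main__":
    ref = [[100, 100], [100, 100]]
    test = [[100, 110], [90, 100]]
    print(mse(ref, test))                           # 50.0
    print(round(psnr(ref, test), 2))                # 31.14
    print(round(structural_content(ref, test), 3))  # 0.995
    print(nae(ref, test))                           # 0.05
```

When comparing an enhanced image against a clean reference, a better enhancement should show lower MSE and NAE, higher PSNR, and an SC closer to 1.0. These metrics need a reference image, so they apply to controlled experiments (for example, synthetically degraded scans) and not to arbitrary field captures.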

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

All papers should be submitted electronically. All submitted manuscripts must be original work that is not under submission at another journal or under consideration for publication in another form, such as a monograph or chapter of a book. Authors of submitted papers are obligated not to submit their paper for publication elsewhere until an editorial decision is rendered on their submission. Further, authors of accepted papers are prohibited from publishing the results in other publications that appear before the paper is published in the Journal unless they receive approval for doing so from the Editor-In-Chief.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. Under this license, users must give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.