Enhancing Text-to-Image Synthesis with an Improved Semi-Supervised Image Generation Model Incorporating N-Gram, Enhanced TF-IDF, and BOW Techniques

Keywords:

GAN, Deep CNN improvements, text-to-image generation, TF-IDF, NIC

Abstract

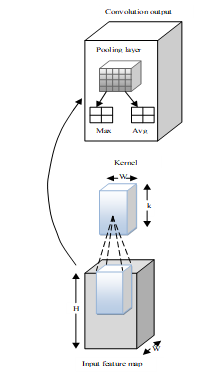

Text-to-image synthesis aims to generate photorealistic, high-resolution images from semantic text descriptions while preserving visual integrity. As image resolution increases, however, so do network complexity and processing requirements. To generate high-resolution images, existing models rely on networks with very large numbers of parameters and computationally expensive methods, which makes training unstable and costly. In this research, we propose a novel semi-supervised image generation model (SIGTI) that uses both labelled and unlabelled datasets for text-to-image conversion. The labelled dataset, which contains text and associated images, is used during the training phase. From the text data, we extract N-gram, enhanced TF-IDF, and bag-of-words (BOW) features. These features are then used to train the NIC semi-supervised image generation model, which combines an improved GAN and a Deep CNN. The unlabelled dataset, which contains only text, is used during the testing phase. To generate the relevant image, we extract the N-gram, enhanced TF-IDF, and BOW features from the text and compare them with the trained features using the proposed NIC model. We evaluate the proposed model thoroughly and compare its performance with that of established methods.
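The three text features named in the abstract can be illustrated with a minimal sketch. It uses the standard TF-IDF definition, not the enhanced variant proposed in the paper (which the abstract does not specify). The captions are made-up examples, and the cosine comparison is only an assumed stand-in for how test-time features might be matched against trained ones; it is not the NIC model.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace, stripping surrounding punctuation."""
    tokens = (word.strip(".,;:!?\"'()") for word in text.lower().split())
    return [t for t in tokens if t]


def ngrams(tokens, n):
    """Return the list of contiguous n-token tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def bag_of_words(tokens):
    """Map each token to its raw count in the caption."""
    return Counter(tokens)


def tfidf(corpus_tokens):
    """Standard TF-IDF: tf = count / len(doc), idf = log(N / df)."""
    n_docs = len(corpus_tokens)
    doc_freq = Counter(term for doc in corpus_tokens for term in set(doc))
    vectors = []
    for doc in corpus_tokens:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical captions, used only for illustration.
captions = [
    "a small yellow bird with black wings",
    "a red flower with yellow petals",
    "a small bird perched on a branch",
]
docs = [tokenize(c) for c in captions]
bigrams = ngrams(docs[0], 2)   # N-gram features (n = 2)
bow = bag_of_words(docs[0])    # BOW features
weights = tfidf(docs)          # one sparse TF-IDF vector per caption
```

In this toy corpus the word "a" occurs in every caption, so its TF-IDF weight is zero, while the two bird captions share the weighted terms "small" and "bird" and therefore score a higher cosine similarity with each other than either does with the flower caption.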

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license requires users to give appropriate credit, provide a link to the license, and indicate if changes were made. If they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.