Hand Gestures Robotic Control Based on Computer Vision

Keywords:

Computer vision, Machine learning, MediaPipe, Hand landmarks

Abstract

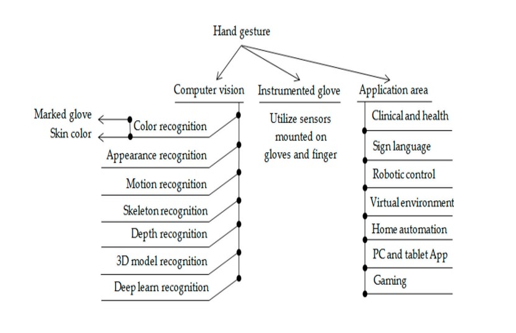

Hand gestures are among the simplest and most important means of communication between people and robots, particularly for people with speech or hearing impairments, who commonly communicate through sign language. The system proposed in this research consists of two parts. The first part detects and classifies hand gestures in real time using computer vision, applying machine learning through the MediaPipe framework. The MediaPipe-based pipeline has three stages: it detects the palm of the hand, locates 21 three-dimensional landmark points on the hand, and classifies the gesture by comparing the coordinates of those points. The second part builds on the first: once a gesture has been detected and classified, the system uses it to control the robot, with each gesture mapped to a specific robot movement. Experiments examining the effect of environmental factors such as light intensity, distance, and tilt angle between the hand and the camera showed that the proposed system performs well in controlling the robot's movement through hand gestures.
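The classification and control steps described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes an upright hand, takes the 21 landmarks as plain `(x, y, z)` tuples in normalized image coordinates (where `y` grows downward), and counts a finger as extended when its tip lies above its PIP joint. The landmark indices follow the MediaPipe Hands layout; the `COMMANDS` table, the function names, and the synthetic `make_hand` helper are hypothetical and exist only for illustration.

```python
from typing import Dict, List, Sequence, Tuple

Landmark = Tuple[float, float, float]  # (x, y, z) in normalized image coordinates

# Indices in the MediaPipe Hands 21-landmark layout.
FINGER_TIPS = (8, 12, 16, 20)  # index, middle, ring, pinky fingertips
FINGER_PIPS = (6, 10, 14, 18)  # the matching PIP (middle) joints

# Hypothetical mapping from number of extended fingers to a robot command.
COMMANDS: Dict[int, str] = {
    0: "stop",
    1: "forward",
    2: "backward",
    3: "left",
    4: "right",
}


def count_extended_fingers(landmarks: Sequence[Landmark]) -> int:
    """Count non-thumb fingers whose tip is above its PIP joint (smaller y)."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    return sum(
        1
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
        if landmarks[tip][1] < landmarks[pip][1]
    )


def gesture_to_command(landmarks: Sequence[Landmark]) -> str:
    """Classify the gesture by finger count and look up the robot command."""
    return COMMANDS[count_extended_fingers(landmarks)]


def make_hand(extended: Sequence[bool]) -> List[Landmark]:
    """Build a synthetic upright hand (assumption: for demonstration only)."""
    hand: List[Landmark] = [(0.5, 0.9, 0.0)] * 21
    for is_up, tip, pip in zip(extended, FINGER_TIPS, FINGER_PIPS):
        hand[pip] = (0.5, 0.5, 0.0)
        # An extended fingertip sits above the PIP joint, a curled one below it.
        hand[tip] = (0.5, 0.3 if is_up else 0.7, 0.0)
    return hand


if __name__ == "__main__":
    print(gesture_to_command(make_hand([True, False, False, False])))
```

With the MediaPipe Python package, the landmark tuples would typically be read from the `x`, `y`, and `z` fields of each entry in `results.multi_hand_landmarks[0].landmark`. A real system would also need to handle the thumb, tilted hands, and frames in which no hand is detected, none of which this sketch attempts.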

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. Under this license, users must give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.