Deep Learning Approaches for Speech Command Recognition in a Low-Resource KUI Language

Keywords:

Speech Recognition, MFCC, KUI language, Attention using LSTM, Deep Neural Network

Abstract

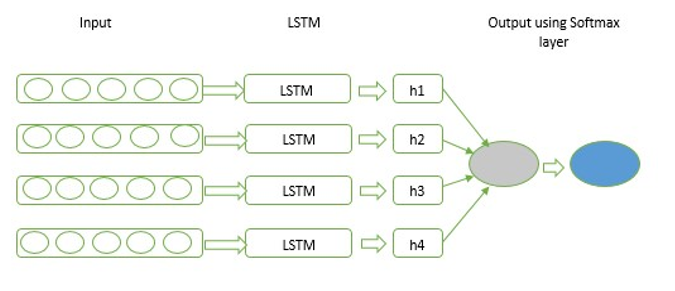

Over time, computers have become able to learn to understand speech from experience, thanks to recent advances in deep learning algorithms. Speech command recognition is essential for assisting disabled and impaired people and for carrying out hands-free activities in customer service and education. Speech recognition draws on multiple disciplines of computer science to identify speech patterns, and identifying these patterns helps computers distinguish between the instructions they have been trained to perform. This research aims to bring speech command recognition into gaming, helping players play games in their native language. Speech recognition can be used to engage with the various situations presented in video games, enabling a greater degree of immersion than AR (Augmented Reality) and VR (Virtual Reality) technologies can offer on their own. This research presents several deep learning algorithms that can be applied to process speech commands, particularly in the KUI language, together with a comparative analysis of them. An in-depth analysis is presented of feature extraction techniques such as Mel-frequency cepstral coefficients (MFCC) and of deep learning algorithms including Artificial Neural Networks (ANN), Recurrent Neural Networks (RNN), Convolutional Neural Networks (CNN), and attention-based LSTM. Several experiments are conducted to compare the performance metrics obtained from all the models.
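As an illustration of the MFCC feature extraction step mentioned in the abstract, the following is a minimal NumPy sketch of a standard MFCC pipeline: pre-emphasis, framing, Hamming windowing, power spectrum, mel filterbank, log compression, and DCT. The abstract does not state the authors' settings, so every parameter here is an assumption chosen from common defaults (16 kHz audio, 25 ms frames with a 10 ms hop, a 512-point FFT, 26 HTK-style mel filters, 13 coefficients, pre-emphasis coefficient 0.97). The function names `mfcc`, `mel_filterbank`, and `dct_matrix` are hypothetical helpers for this sketch and are not taken from the paper.

```python
import numpy as np


def hz_to_mel(hz):
    """HTK-style mel scale (assumed): m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * np.log10(1.0 + np.asarray(hz) / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (np.asarray(mel) / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Hypothetical helper: triangular filters evenly spaced on the mel scale, 0 Hz to sr/2."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):  # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / max(right - center, 1)
    return fbank


def dct_matrix(n_out, n_in):
    """Hypothetical helper: orthonormal DCT-II basis (rows are cepstral indices)."""
    n = np.arange(n_in)
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * n + 1) / (2 * n_in))
    basis[0] *= np.sqrt(1.0 / n_in)
    basis[1:] *= np.sqrt(2.0 / n_in)
    return basis


def mfcc(signal, sr=16000, n_mfcc=13, n_mels=26, n_fft=512,
         frame_ms=25.0, hop_ms=10.0, preemph=0.97):
    """Return an (n_frames, n_mfcc) MFCC matrix; all defaults are assumptions.

    Assumes the frame length in samples does not exceed n_fft.
    """
    signal = np.asarray(signal, dtype=float)
    # 1. Pre-emphasis boosts high frequencies: y[n] = x[n] - a * x[n-1].
    emphasized = np.append(signal[0], signal[1:] - preemph * signal[:-1])
    # 2. Split into overlapping frames, zero-padding so the last frame is complete.
    frame_len = int(round(sr * frame_ms / 1000.0))
    hop = int(round(sr * hop_ms / 1000.0))
    n_frames = 1 + max(0, int(np.ceil((len(emphasized) - frame_len) / hop)))
    pad = (n_frames - 1) * hop + frame_len - len(emphasized)
    emphasized = np.append(emphasized, np.zeros(max(pad, 0)))
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(frame_len)
    # 3. Power spectrum of each windowed frame.
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # 4. Mel filterbank energies, log-compressed (epsilon avoids log(0)).
    log_mel = np.log(power @ mel_filterbank(sr, n_fft, n_mels).T + 1e-10)
    # 5. DCT decorrelates the log energies; keep the first n_mfcc coefficients.
    return log_mel @ dct_matrix(n_mfcc, n_mels).T


sr = 16000
t = np.arange(sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 440.0 * t)  # 1 s synthetic tone standing in for a spoken command
feats = mfcc(tone, sr=sr)
print(feats.shape)  # (99, 13)
```

The resulting frames-by-coefficients matrix is the kind of input that a CNN (treating it as a 2-D image) or an LSTM (treating it as a sequence of frames) could consume. Library implementations such as `librosa.feature.mfcc` use different defaults, for example the mel-scale formula and the number of filters, so their coefficient values will not match this sketch exactly.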




License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.


IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license lets others share and adapt the material, provided they give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.