Image Captioning Using ResNet RS and Attention Mechanism

Keywords:

CNN (Convolutional Neural Network), Deep learning, Gradient descent, LSTM (Long Short-Term Memory), Attention Mechanism, NLTK (Natural Language Toolkit), ResNet, RNN (Recurrent Neural Network)

Abstract

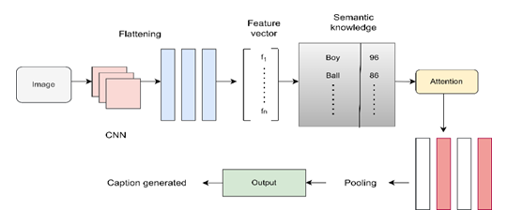

Automatically generating image captions is a major challenge in natural language processing. Most researchers have used convolutional neural networks as encoders and recurrent neural networks as decoders. However, to predict captions correctly, a model must be able to identify the semantic relationships between the objects visible in an image. The attention mechanism linearly combines encoder and decoder states, emphasizing both the visual information from the image and the semantic information from the caption. To predict captions for a given image, we used a pretrained convolutional neural network, ResNetRS101, together with the Bahdanau attention mechanism. Our primary objective is to evaluate how ResNetRS101 with Bahdanau attention performs in comparison with the conventional CNN-LSTM technique on the same dataset.
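As an illustration of how encoder and decoder states can be linearly combined, the following is a minimal sketch of additive (Bahdanau-style) attention in plain Python. It is not the authors' implementation: the helper names (`matvec`, `softmax`, `additive_attention`, `random_matrix`), the toy dimensions, and the randomly initialized parameters are all assumptions made for the example. In a trained model the projection parameters would be learned, and the region features would come from the CNN encoder.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(features, hidden, w_feat, w_hid, v):
    """Additive (Bahdanau-style) attention.

    features: list of image-region vectors from the encoder
    hidden:   current decoder state vector
    w_feat, w_hid, v: projection parameters (random here, learned in practice)
    Returns (context vector, attention weights).
    """
    projected_hidden = matvec(w_hid, hidden)
    scores = []
    for feat in features:
        projected_feat = matvec(w_feat, feat)
        # Sum the encoder and decoder projections, then apply tanh.
        combined = [math.tanh(a + b)
                    for a, b in zip(projected_feat, projected_hidden)]
        scores.append(sum(vi * ci for vi, ci in zip(v, combined)))
    weights = softmax(scores)
    dim = len(features[0])
    # Context vector: attention-weighted sum of the region features.
    context = [sum(w * feat[d] for w, feat in zip(weights, features))
               for d in range(dim)]
    return context, weights


def random_matrix(rows, cols, rng):
    """Hypothetical helper: small random matrix for the toy example."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
# Toy sizes chosen for readability; they are not taken from the paper.
num_regions, feat_dim, hid_dim, attn_dim = 4, 6, 5, 3
features = random_matrix(num_regions, feat_dim, rng)
hidden = [rng.uniform(-0.5, 0.5) for _ in range(hid_dim)]
w_feat = random_matrix(attn_dim, feat_dim, rng)
w_hid = random_matrix(attn_dim, hid_dim, rng)
v = [rng.uniform(-0.5, 0.5) for _ in range(attn_dim)]

context, weights = additive_attention(features, hidden, w_feat, w_hid, v)
```

Here `weights` holds one non-negative value per image region, summing to 1, and `context` is the weighted summary of the image that a decoder would consume at the current step.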

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license requires users to give appropriate credit, provide a link to the license, and indicate if changes were made. If they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.