Conversion of Neutral Speech into Emotional Speech in Hindi Language based on Supra Segmental Features

Keywords:

Emotion Conversion, F0 Contour, Pitch, Prosodic features, Speech processing

Abstract

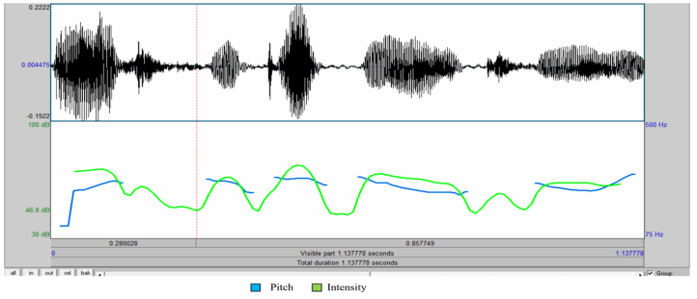

Speech is becoming a prevalent and important interface between users and computer systems and other smart machines, and it is expected to dominate input and output in computers and similar devices in the coming era. Emotions play an expressive role in ordinary speech, adding spontaneity and intelligibility, which makes emotional speech an active, emerging area of research. Converting neutral speech into the intended emotional speech involves examining, identifying, and then modifying the characteristics of the speech utterance. The roles of prosodic features, in particular the fundamental frequency (F0), pitch, and intensity, are studied for various emotions and used for emotion conversion. This paper presents a methodology for converting Hindi speech from neutral to Happy, Sad, and other emotions. The algorithm is based on a Linear Modification Model, in which the F0 contour, pitch, and duration of each segment of the speech are modified directly. Each continuous sentence is segmented into units such as words, and these parameters are analyzed across the different emotional renditions of the same neutral sentence. Based on this analysis, the parameters are modified to produce the target emotion.
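The abstract does not give the exact form of the Linear Modification Model, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes one common linear form: for each word segment, the mean of the neutral F0 contour is scaled, the excursions around that mean are scaled by a range ratio, and the segment duration is scaled by a duration ratio, with all three ratios estimated from a parallel neutral/emotional recording of the same sentence. F0 contours are assumed to be already extracted (one value per frame, 0 for unvoiced frames) and already segmented into words; the names `ProsodyStats`, `word_stats`, `estimate_factors`, `modify_word`, and `convert` are hypothetical. Intensity is not modified here, and the sketch changes parameter contours only: resynthesizing a waveform from them (for example with a PSOLA-style method) is outside its scope.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Sequence


@dataclass
class ProsodyStats:
    """Prosodic summary of one word segment (hypothetical helper type)."""
    mean_f0: float   # mean F0 over voiced frames, in Hz
    f0_range: float  # max minus min F0 over voiced frames, in Hz
    n_frames: int    # segment length in analysis frames (duration proxy)


def word_stats(f0: Sequence[float]) -> ProsodyStats:
    """Summarize a word's F0 contour; frames with F0 <= 0 count as unvoiced."""
    voiced = [v for v in f0 if v > 0]
    if not voiced:
        raise ValueError("segment has no voiced frames")
    return ProsodyStats(mean(voiced), max(voiced) - min(voiced), len(f0))


def estimate_factors(neutral: List[Sequence[float]],
                     emotional: List[Sequence[float]]) -> List[Dict[str, float]]:
    """Per-word emotional/neutral ratios from one parallel sentence pair."""
    if len(neutral) != len(emotional):
        raise ValueError("both renditions need the same number of word segments")
    factors = []
    for n_seg, e_seg in zip(neutral, emotional):
        n, e = word_stats(n_seg), word_stats(e_seg)
        factors.append({
            "mean": e.mean_f0 / n.mean_f0,
            # A flat neutral contour has zero range; leave excursions unscaled.
            "range": e.f0_range / n.f0_range if n.f0_range > 0 else 1.0,
            "duration": e.n_frames / n.n_frames,
        })
    return factors


def modify_word(f0: Sequence[float], k: Dict[str, float]) -> List[float]:
    """Linearly modify one word's F0 contour, then rescale its duration."""
    mu = word_stats(f0).mean_f0
    target_mu = mu * k["mean"]
    # Move the mean and scale excursions around it; unvoiced frames stay 0.
    shaped = [target_mu + (v - mu) * k["range"] if v > 0 else 0.0 for v in f0]
    # Nearest-frame resampling changes the length without blending voiced
    # and unvoiced frames, as linear interpolation would.
    n = len(shaped)
    new_len = max(1, round(n * k["duration"]))
    return [shaped[j * n // new_len] for j in range(new_len)]


def convert(neutral: List[Sequence[float]],
            factors: List[Dict[str, float]]) -> List[List[float]]:
    """Apply per-word factors to each word segment of a neutral sentence."""
    if len(neutral) != len(factors):
        raise ValueError("need one factor set per word segment")
    return [modify_word(seg, k) for seg, k in zip(neutral, factors)]


if __name__ == "__main__":
    # Toy one-word example: F0 in Hz per frame, 0.0 marks an unvoiced frame.
    neutral = [[100.0, 120.0, 0.0, 140.0]]
    emotional = [[140.0, 0.0, 180.0, 0.0, 220.0, 0.0, 0.0, 0.0]]
    factors = estimate_factors(neutral, emotional)
    print(factors)  # [{'mean': 1.5, 'range': 2.0, 'duration': 2.0}]
    print(convert(neutral, factors))
    # [[140.0, 140.0, 180.0, 180.0, 0.0, 0.0, 220.0, 220.0]]
```

In this toy example the emotional rendition has a 1.5 times higher mean F0, twice the F0 range, and twice the length, so the converted contour is shifted up, stretched around its new mean, and each frame is repeated once. In practice the factors would presumably be averaged over many parallel sentences per emotion rather than taken from a single pair.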




License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.


IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license lets others share and adapt the material provided they give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.