A Multimodal Architecture with Visual-Level Framework for Virtual Assistant

Keywords:

Interactive virtual personal assistant, LSTM hand-landmark gesture recognition, LSTM intent classification chat bot, Multimodal input, System automation, Visual level framework

Abstract

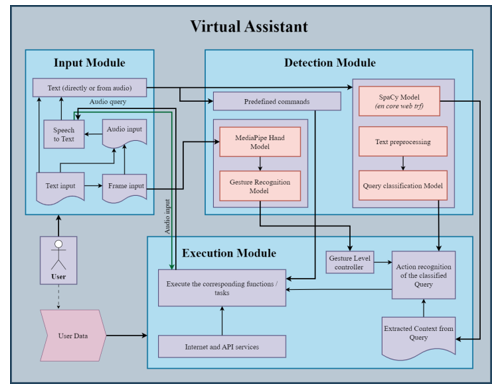

Virtual personal assistants (VPAs) have grown in popularity due to their scalability and accessibility. Advances in machine learning (ML) and natural language processing (NLP) have changed how we use technology and driven the growth of digital assistant technology. VPAs use artificial intelligence to personalize, simplify, and automate user tasks, and computer vision to recognize visual cues, which increases their versatility and functionality across input types. The proposed architecture for the VPA is based on two main components that work in tandem: a chat-like model that accepts text or speech input to help the user, and a multi-level computer vision framework that enables control of and interaction with the computer through visual input. The VPA uses NLP and ML algorithms to read and interpret user queries in both audio and text formats. Based on the classification and context of the query, the VPA takes automated actions to improve operational efficiency. The computer vision framework lets the user control the computer without a mouse or keyboard, using gestures and levels to represent actions in the environment, which improves the user experience by adding convenience and effectiveness. Automated tasks include opening particular apps, creating weather reports based on location, adjusting screen brightness and system volume, operating a virtual mouse, and managing launched applications (minimize, maximize, or audio input) with hand gestures. Thus, VPAs save time, boost productivity, and enhance the use of technology at home and in the workplace.
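The two-component design described in the abstract can be illustrated with a minimal sketch in which both input channels feed one action dispatcher. This is not the authors' implementation: the `Event`, `Dispatcher`, `classify_intent`, and `INTENT_KEYWORDS` names are hypothetical, the keyword lookup is only a stand-in for the LSTM intent classifier, the gesture label is assumed to arrive from a separate gesture-recognition model, and the actions return strings instead of touching the operating system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class Event:
    """One recognized input, whatever channel produced it."""
    source: str  # "text", "speech", or "gesture"
    label: str   # intent or gesture label predicted upstream


# Assumption: a keyword table stands in for the LSTM intent classifier.
INTENT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "open_app": ("open", "launch"),
    "weather_report": ("weather", "forecast"),
    "set_brightness": ("brightness",),
    "set_volume": ("volume",),
}


def classify_intent(query: str) -> str:
    """Return the first intent whose keyword appears as a word in the query."""
    words = query.lower().split()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in words for keyword in keywords):
            return intent
    return "unknown"


class Dispatcher:
    """Maps intent or gesture labels to automated actions."""

    def __init__(self) -> None:
        self._actions: Dict[str, Callable[[], str]] = {}

    def register(self, label: str, action: Callable[[], str]) -> None:
        self._actions[label] = action

    def handle(self, event: Event) -> str:
        action = self._actions.get(event.label)
        if action is None:
            return f"no action registered for '{event.label}'"
        return action()


dispatcher = Dispatcher()
# Hypothetical actions; a real assistant would call system APIs here.
dispatcher.register("open_app", lambda: "opening application")
dispatcher.register("weather_report", lambda: "building weather report")
dispatcher.register("minimize_window", lambda: "minimizing active window")

# Chat channel: the query is classified, then dispatched.
text_event = Event("text", classify_intent("Open the browser"))
# Vision channel: the label is assumed to come from the gesture model.
gesture_event = Event("gesture", "minimize_window")

print(dispatcher.handle(text_event))
print(dispatcher.handle(gesture_event))
```

Routing both channels through one label-to-action table keeps the automation logic independent of how the label was produced, so a trained classifier could replace the keyword stand-in without changing the dispatcher.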

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
